A Familiar Scenario

Imagine you spent an entire weekend writing a paper for your Instructional Design course. It’s a lot of work and you’re unfamiliar with the content. You dedicate a few hours to reviewing the syllabus, assignment description and resources and you feel pretty confident in your final result.

When you get the grades back, you’re shocked to see a C+ next to your name. Under the feedback section, you get the following comment from your instructor:

“You’re just not getting it. Reread the diagram on page 2 and then resubmit.”

As the learner, take a moment to jot down your internal reactions and external actions. I’ve shared mine in this chart:

| External Actions | Internal Reactions |
| --- | --- |
| Reread the syllabus | Confusion |
| Revisit the diagram | Frustration |
| Contact the instructor for clarification | Demotivation |
| Email classmates to ask them for help | Resentment |

Look at the internal reactions. That escalated quickly, didn’t it? I’m sure the instructor didn’t mean to imply that I, the learner, hadn’t done my due diligence in reading all relevant materials, but that’s what it feels like. I might reach out to other participants to discuss my confusion, only to find that they felt the same way. A picture begins to emerge: one of miscommunication that, when repeated, can quickly snowball into a negative learning experience.

This example is applicable to any educational setting and is indicative of a few areas of contention that I have experienced as both a learner and an educator. We’ll break down the instructor’s statement to find out why it fails to be useful.

Start with “You’re just not getting it.” This statement implies that there is a flaw with the learner that prevents them from grasping the content. It’s a variation of the old “try harder,” as if effort alone is all it takes to learn. Additionally, as described in a previous post, it can feel like a personal attack.

Then there’s “Reread the diagram on page 2 and then resubmit.” What’s not to love? It tells the learner where to look, which some might categorize as a helpful hint. The problem here is that the instructor doesn’t acknowledge their responsibility to expand on or further clarify the instructions. If the learner stared at the diagram for thirty additional minutes, would that somehow improve their comprehension? Should the instructor provide more context or other support to ensure the learner understands the content and remains motivated throughout the course or session?

The answer is yes. Providing that support is exactly the hallmark of a good educator and, if you’re prepared appropriately for the topic you’re teaching, it doesn’t take much to make adjustments.

Corrective and Confirming Feedback

Using the same scenario as above, imagine if you received this feedback instead:

“Not quite, but you’re on the right track. You’ve done a good job of explaining x but y is missing. I recommend reading resources 1 and 2 again and using z to frame your answer.”

It takes a few more words, sure, but it accomplishes several things:

- Sets a positive tone

- Calls out what is right (because no one likes to be wrong all the time!)

- Calls out areas of improvement (after the praise)

- Suggests concrete ways to improve

- Provides additional resources and/or context

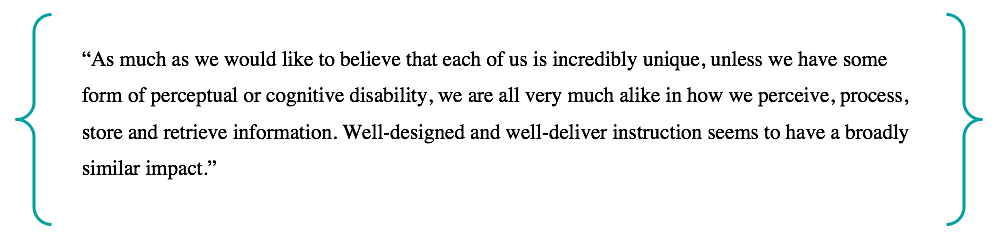

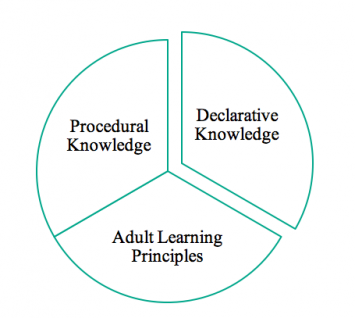

Using a combination of corrective and confirming feedback empowers students to explore topics independently, while looking to instructors, facilitators or trainers to provide guidance and support. This doesn’t mean that you, the educator, need to handhold, coddle or give all of the answers away. Instead, it shows that you respect your learners and their ability to learn in ways that are best for them, and that you support their educational journey.

Think of the last time you learned a new and complex topic. If someone had offered guiding tips and suggestions that helped you relate the content to something you already knew, or frame it within the context of your current life, wouldn’t that have made the experience not only more enjoyable but more effective?

What do you think? Have you used corrective and/or confirming feedback? What have been the results? As a learner, what type of feedback are you used to receiving and how does it influence your learning experience?